Collaborative Assistants For The Society

CASY + {Hack@Home} will take place on October 15, 2021, and CASY 2.0 will take place on February 11, 2022.

The event is free to attend after registration and is intended to promote the ethical use of digital assistants in society for everyday activities.

See casy.aiisc.ai or the event page for more information.

DISSERTATION DEFENSE

Department of Computer Science and Engineering

University of South Carolina

Author : Nare Karapetyan

Advisor : Dr. Ioannis Rekleitis

Date : Oct 11, 2021

Time : 12:30pm

Place : Meeting Room 2267, Innovation Center

Abstract

This thesis is motivated by real-world problems faced in aquatic environments. It addresses the problem of area coverage path planning with robots: moving a robot's end-effector over all available space while avoiding existing obstacles. The problem is considered first in a 2D environment with a single robot for specific environmental monitoring operations, and then with multi-robot systems, a setting known to be NP-complete. Next we tackle the coverage problem in 3D space, a step towards underwater mapping of shipwrecks and other underwater structures and monitoring of coral reefs.

The first part of this thesis leverages human expertise in river exploration and data collection strategies to automate and optimize environmental monitoring and surveying operations using autonomous surface vehicles (ASVs). In particular, three deterministic algorithms for both partial and complete coverage of a river segment are proposed, providing varying path length, coverage density, and turning patterns. These strategies resulted in increases in accuracy and efficiency compared to human performance. The proposed methods were extensively tested in simulation using maps of real rivers of different shapes and sizes. In addition, to verify their performance in real-world operations, the algorithms were deployed successfully on several parts of the Congaree River in South Carolina, USA, resulting in a total of more than 35 km of coverage trajectories in the field.

In large-scale coverage operations, such as marine exploration or aerial monitoring, single-robot approaches are not ideal. Not only might coverage take too long, but the robot might also run out of battery charge before completing it. In such scenarios, multi-robot approaches are preferable. Furthermore, several real-world vehicles are non-holonomic, but can be modeled using Dubins vehicle kinematics. The second part of this thesis focuses on environmental monitoring of aquatic domains using a team of autonomous surface vehicles (ASVs) subject to Dubins vehicle constraints. It is worth noting that both multi-robot coverage and Dubins vehicle coverage are NP-complete problems. As such, we present two heuristic methods, based on a variant of the traveling salesman problem (k-TSP) formulation and on clustering algorithms, that efficiently solve the problem. The proposed methods are tested both in simulation and with a team of ASVs operating on a 200 m x 200 m lake area to assess their scalability and their applicability in the real world.
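To make the "cluster first, then route" idea concrete, here is a minimal Python sketch. It is not the method from the thesis: it assumes plain k-means for splitting waypoints among vehicles and a greedy nearest-neighbor tour inside each cluster, and it ignores Dubins turning constraints entirely. The waypoint grid, the depot location, and all function names are hypothetical; only the 200 m x 200 m area size is taken from the abstract.

```python
import math


def kmeans(points, k, iterations=20):
    """Plain Lloyd's k-means; returns a list of k clusters (lists of points)."""
    # Deterministic initialisation: k points spread evenly through the input.
    centers = [points[i * len(points) // k] for i in range(k)]
    clusters = [[] for _ in range(k)]
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda c: math.dist(p, centers[c]))
            clusters[nearest].append(p)
        for c, members in enumerate(clusters):
            if members:  # keep the old center if a cluster went empty
                centers[c] = (
                    sum(x for x, _ in members) / len(members),
                    sum(y for _, y in members) / len(members),
                )
    return clusters


def nearest_neighbor_tour(start, waypoints):
    """Greedy tour: always drive to the closest unvisited waypoint."""
    tour, remaining, here = [], list(waypoints), start
    while remaining:
        nxt = min(remaining, key=lambda p: math.dist(here, p))
        remaining.remove(nxt)
        tour.append(nxt)
        here = nxt
    return tour


def tour_length(start, tour):
    """Length of the closed path start -> tour -> start."""
    path = [start] + tour + [start]
    return sum(math.dist(a, b) for a, b in zip(path, path[1:]))


# Hypothetical 200 m x 200 m area sampled every 20 m, shared by 3 vehicles.
waypoints = [(x, y) for x in range(10, 200, 20) for y in range(10, 200, 20)]
depot = (0.0, 0.0)
tours = [nearest_neighbor_tour(depot, c) for c in kmeans(waypoints, 3)]
for i, t in enumerate(tours):
    print(f"vehicle {i}: {len(t)} waypoints, {tour_length(depot, t):.0f} m")
```

Each waypoint ends up in exactly one vehicle's tour, so the tours together cover the whole grid. A real planner would also balance tour lengths across vehicles and respect the minimum turning radius.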

Finally, in the third part, a step towards solving the coverage path planning problem in the 3D environment for surveying underwater structures, employing vision-only navigation strategies, is presented. Given the challenging conditions of the underwater domain, it is very complicated to obtain accurate state estimates reliably. Consequently, it is a great challenge to extend known path planning or coverage techniques developed for aerial or ground robots. In this work we investigate a navigation strategy utilizing only vision to assist in covering a complex underwater structure. We propose to use a navigation strategy akin to what a human diver executes when circumnavigating a region of interest, in particular when collecting data from a shipwreck. The focus of this work is a step towards enabling the autonomous operation of lightweight robots near underwater wrecks in order to collect data for creating photo-realistic maps and volumetric 3D models while at the same time avoiding collisions. The proposed method uses convolutional neural networks to learn the control commands based on the visual input. We have demonstrated the feasibility of using a system based only on vision to learn specific navigation strategies, with 80% accuracy in predicting changes in control commands. Experimental results and a detailed overview of the proposed method are discussed.

Meeting Location:

Storey Innovation Center 1400

Live Virtual Meeting Link:

Speaker's Bio: Jae-sun Seo is an Associate Professor at the School of ECEE at Arizona State University. His research interests include efficient hardware design of machine learning / neuromorphic algorithms and integrated power management. He was a recipient of the IBM Outstanding Technical Achievement Award (2012), NSF CAREER Award (2017), and Intel Outstanding Researcher Award (2021).

Talk Abstract: Artificial intelligence (AI) and deep learning have been successful across many practical applications, but state-of-the-art algorithms require an enormous amount of computation, memory, and on-/off-chip communication. To bring expensive algorithms to a low-power processor, a number of digital CMOS ASIC solutions have been previously proposed, but limitations still exist on memory access and footprint. To improve upon the conventional row-by-row operation of memories, “in-memory computing (IMC)” designs have been proposed, which perform analog computation inside memory arrays by asserting multiple or all rows simultaneously. In this talk, we will present circuit-level, system-level, and algorithm-level techniques for designing SRAM IMC-based AI systems with high energy efficiency (compared to digital ASIC), high accuracy (similar to software baseline), and enhanced robustness (against adversarial attacks).

Tomorrow, at the Seminar in Advances in Computing, we have an exciting talk by Dr. Laura Boccanfuso, the founder and CEO of Van Robotics. The talk will focus on AI- and robot-assisted learning for students. Dr. Boccanfuso is a UofSC alumna who received her doctoral degree under the supervision of Dr. Jason O'Kane.

Meeting Location:

Storey Innovation Center 1400

BIO: Dr. Laura Boccanfuso is founder and CEO of Van Robotics, a social robotics company headquartered in Columbia, SC that builds AI-enabled tutors. Laura received her PhD in Computer Science and Engineering from the University of South Carolina and later worked in the Yale University Social Robotics Lab and Child Study Center as a Postdoctoral Researcher and Associate Research Scientist. She completed the Yale Venture Creation Program in 2016, officially launched the company in 2017, and was selected for the Techstars Accelerator in Austin later that year. Laura’s work focuses on holistic robot-assisted learning, incorporating best practices in human-robot interaction that leverage sound educational pedagogy, cognitive and learning science, and machine learning techniques to accelerate learning.

TALK ABSTRACT: Existing AI-enabled applications that personalize learning for students primarily focus on assessing which skills the student has mastered, which skills they have not yet mastered, and the optimal learning trajectory, or ordered series of skill-building lessons, that will accelerate skills mastery for the individual. However, the process of learning effectively is not confined to a well-defined set of cognitive leaps that result in skills mastery. Instead, effective learning is very often driven by a set of personal factors that includes individual cognitive ability, mental focus, social and emotional intelligence, and intrinsic or extrinsic motivation. In this presentation, we will explore some methodologies for actively collecting and processing measures of these traits and conditions, and discuss some of the constraints that must be addressed in order to implement an effective multi-modal approach for highly personalized learning.

See this ACM Event Page for details.

This Thursday I will give an introduction to the capacity of Django to make professional websites with ease. I'll explain the basics of starting your own site, how Django works, and finally go into some of the work I am doing with Django. Since this will involve a mix of Python, JavaScript, HTML, and CSS, we will also briefly touch on anything that may be new.

Sep 21, Tuesday, 10:00-11:15 am

Blackboard link: https://us.bbcollab.com/guest/f567247c101145cebc6eaa937af2cecd

Sep 23, Thursday, 10:00-11:15 am

Blackboard link: https://us.bbcollab.com/guest/f567247c101145cebc6eaa937af2cecd

On campus class at Seminar Room, AI Institute, 1112 Greene St, Columbia (5th Floor; Science & Technology Building)

Speaker Bio:

Dr. Diptikalyan Saha (Dipti) is a Senior Technical Staff Member and manager of the Reliable AI team in the Data & AI department of IBM Research in Bangalore. His research interests include Artificial Intelligence, Natural Language Processing, Knowledge Representation, Program Analysis, Security, Software Debugging, Testing, Verification, and Programming Languages. He received his Ph.D. degree in Computer Science from the State University of New York at Stony Brook and his B.E. degree in Computer Science and Engineering from Jadavpur University. His group’s work on Bias in AI Systems is available through AI OpenScale in IBM Cloud as well as through open-source AI Fairness 360.

Related Material

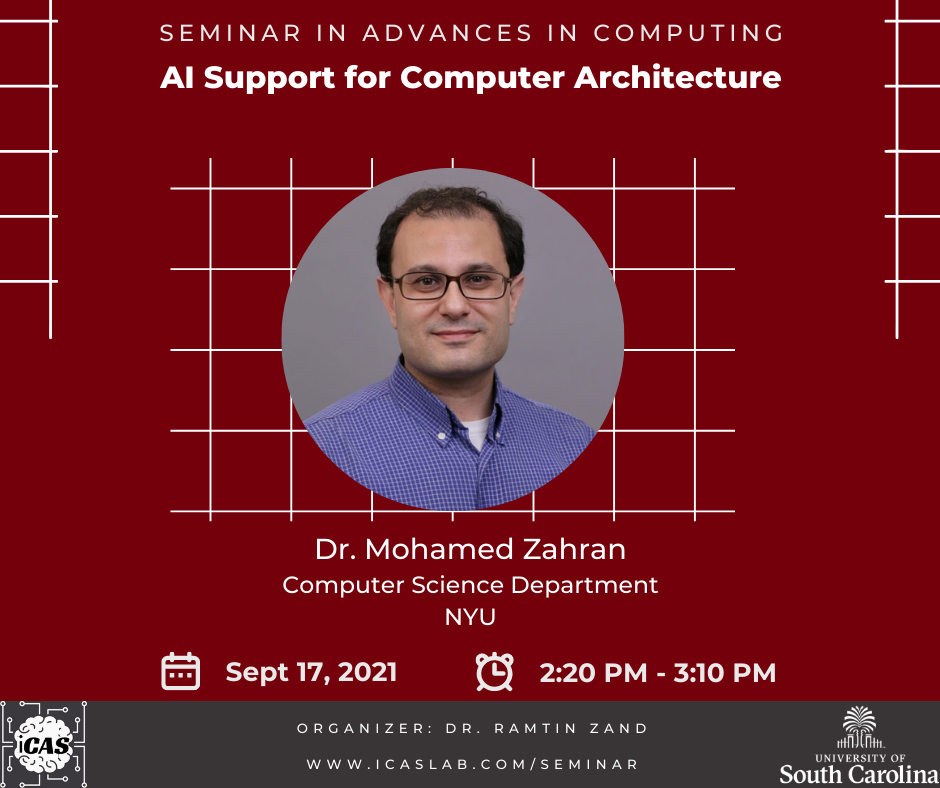

This week at the Seminar in Advances in Computing, we have an exciting talk by Dr. Mohamed Zahran from New York University. The talk will focus on machine learning and adaptive computer architectures. Please use the link below if you are interested in joining the meeting virtually. Also, please share it with your students if you believe attending the talk will benefit them.

Meeting Location:

Storey Innovation Center 1400

Meeting Link

BIO: Mohamed Zahran is a professor with the Computer Science Department at the Courant Institute of NYU. He received his Ph.D. in Electrical and Computer Engineering from the University of Maryland at College Park. His research interests span several aspects of computer architecture, such as architecture of heterogeneous systems, hardware/software interaction, and biologically-inspired architectures. He has served on many review panels for organizations such as DoE and NSF, as a PC member for many premier conferences, and as a reviewer for many journals. He will serve as the general co-chair of the 49th International Symposium on Computer Architecture (ISCA) to be held in NYC in 2022. Zahran is an ACM Distinguished Speaker, a senior member of IEEE, a senior member of ACM, and a member of the Sigma Xi scientific honor society.

TALK ABSTRACT: For the past decade people have been predicting the death of Moore's law, which is now as likely as ever for both technological and economic reasons. We already kissed Dennard scaling goodbye around 2004. Given that, we are left with two options to get performance: parallel processing and specialized domain-specific architectures (e.g. GPUs, TPUs, FPGAs, ...). But programs have different characteristics that change during the lifetime of a program's execution and across different programs. Both general-purpose multicore and specialized architectures are designed with an average case in mind, whether this average case is for a general-purpose parallel program or a specialized program like GPU-friendly ones, and this is anything but efficient in an era where power efficiency is as important as performance. Building a machine that can adapt to program requirements is necessary but not sufficient. In this talk we discuss the research path toward building a machine that learns from executing different programs and adapts for best performance when executing unseen programs. We discuss the main challenges involved: a fully adaptable machine is expensive in terms of hardware requirements, and transferring learning from one program execution to another is challenging.

Live Virtual Meeting Link

Meeting Location:

Storey Innovation Center 1400

BIO: Dr. Qi Zhang is an assistant professor in the Computer Science and Engineering department and the Artificial Intelligence Institute at the University of South Carolina. He received his Ph.D. from the Computer Science and Engineering department at the University of Michigan. His research seeks solutions for coordinating systems of decision-making agents operating in uncertain, dynamic environments. As hand-engineered solutions for such environments often fall short, he uses ideas from planning and reinforcement learning to develop and analyze algorithms that autonomously coordinate agents in an effective, trustworthy, and communication-efficient manner. In particular, he has been working on social commitments for trustworthy coordination, communication learning, and language emergence among coordinated agents, and on applications of (multi-agent) reinforcement learning such as intelligent transportation systems and dialogue systems.

ABSTRACT: This talk will present two recent works on training homogeneous reinforcement learning (RL) agents in two distinct scenarios. The first scenario considers training a group of homogeneous agents that will be deployed in isolation to perform a single-agent RL task, which finds applications in ensemble RL. The first work develops effective techniques for training such an ensemble of deep Q-learning agents, which help achieve state-of-the-art policy distillation performance in Atari games and continuous control tasks. The second scenario considers training a group of homogeneous agents to cooperatively perform a multi-agent RL task such as team sports. The second work develops novel techniques that exploit the homogeneity to train the agents in a distributed and communication-efficient manner.

See https://www.icaslab.com/Seminar

Meeting Link:

https://teams.microsoft.com/l/meetup-join/19%3ameeting_YWQ4NzcyYjMtYmZjNC00MTNjLTk4NTItYjRkOTFmYjk3NTRk%40thread.v2/0?context=%7b%22Tid%22%3a%224b2a4b19-d135-420e-8bb2-b1cd238998cc%22%2c%22Oid%22%3a%225fc2170a-7068-4a33-9021-df11b94ba696%22%7d

BIO: Dr. Xiaochen Guo is an associate professor in the Department of Electrical and Computer Engineering at Lehigh University. Dr. Guo received her Ph.D. degree in Electrical and Computer Engineering from the University of Rochester, and received the IBM Ph.D. Fellowship twice. Dr. Guo’s research interests are in the broad area of computer architecture, with an emphasis on leveraging emerging technologies to build energy-efficient microprocessors and memory systems. Dr. Guo is an IEEE senior member and a recipient of the National Science Foundation CAREER Award, the P. C. Rossin Assistant Professorship, and the Lawrence Berkeley National Laboratory Computing Sciences Research Pathways Fellowship.

ABSTRACT: General-purpose computing systems employ memory hierarchies to provide the appearance of a single large, fast, and coherent memory for general applications with good locality. However, conventional memory hierarchies cannot provide sufficient isolation for security workloads, support richer semantics, or hide memory latency for irregular memory accesses. This talk will present two of our recent works aiming to address these special needs in important workloads. In the first work, we propose to add a virtually addressed, set-associative scratchpad (SPX64) to a general-purpose CPU to support isolation and hash lookups in security and persistent applications. The proposed scratchpad is placed alongside a traditional cache, and is able to avoid many of the programming challenges associated with traditional scratchpads without sacrificing generality. SPX64 delivers increased security and improves performance. In the second work, a software-assisted hardware prefetcher is proposed, which focuses on repeating irregular memory access patterns for data structures that cannot benefit from conventional memory hierarchies and hardware prefetchers. The key idea is to provide a programming interface to record the cache miss sequence on the first appearance of a memory access pattern and to prefetch by replaying the pattern on the following repetitions. By leveraging programmer knowledge, the proposed Record-and-Replay (RnR) prefetcher can achieve over 95% prefetching accuracy and miss coverage.
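The record-then-replay idea can be illustrated with a toy Python simulation. This is only a sketch under strong assumptions, not the RnR design from the talk: the cache is a small fully associative LRU cache, prefetches complete instantly, and the class name, function names, cache size, and `lookahead` window are all hypothetical choices made for illustration.

```python
import random
from collections import OrderedDict


class LRUCache:
    """Tiny fully associative LRU cache that tracks line addresses only."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.lines = OrderedDict()

    def fill(self, addr):
        """Bring a line in (demand fill or prefetch), evicting the LRU line."""
        self.lines[addr] = True
        self.lines.move_to_end(addr)
        if len(self.lines) > self.capacity:
            self.lines.popitem(last=False)

    def access(self, addr):
        """Demand access: return True on a hit; the line is filled on a miss."""
        hit = addr in self.lines
        self.fill(addr)
        return hit


def simulate(pattern, passes, capacity, use_rnr, lookahead=4):
    """Return the demand-miss count of each pass over `pattern`."""
    cache = LRUCache(capacity)
    recorded = []  # miss sequence captured during the first pass
    misses_per_pass = []
    for p in range(passes):
        misses = 0
        pos = 0  # index of the next recorded miss we expect to reach
        pf = 0   # index of the next recorded miss to prefetch
        for addr in pattern:
            if use_rnr and p > 0:
                # Replay: keep `lookahead` recorded lines ahead of demand.
                while pf < min(pos + lookahead, len(recorded)):
                    cache.fill(recorded[pf])
                    pf += 1
                if pos < len(recorded) and addr == recorded[pos]:
                    pos += 1
            if not cache.access(addr):
                misses += 1
                if use_rnr and p == 0:
                    recorded.append(addr)  # record phase
        misses_per_pass.append(misses)
    return misses_per_pass


if __name__ == "__main__":
    # 64 distinct, irregular addresses repeated 3 times; cache holds 16 lines.
    pattern = random.Random(0).sample(range(10_000), 64)
    print(simulate(pattern, 3, 16, use_rnr=False))  # [64, 64, 64]
    print(simulate(pattern, 3, 16, use_rnr=True))   # [64, 0, 0]
```

Because the pattern is longer than the cache, plain LRU misses on every access in every pass, while replaying the recorded sequence removes the misses from the second pass onward. The perfect coverage here is a product of the idealized model (zero prefetch latency, an exactly repeating pattern), not a prediction of real hardware behavior.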